The Side Effect Club: Google’s Nested Learning Solves AI Memory Loss Issues

Nested Learning: Google’s Groundbreaking Fix for Catastrophic Forgetting in Machine Learning

Estimated reading time: 5 minutes

- Nested Learning offers a new approach to combatting catastrophic forgetting.

- The paradigm structures a model as nested optimization problems, like Russian dolls, with the aim of making it more resilient.

- The goal is for models to retain knowledge of older tasks while adapting to new challenges.

- Google’s innovation signifies a key advancement in AI stability and reliability.

- Future implications could reduce the risk of cognitive amnesia in AI systems.

Table of Contents

- Understanding Google’s Nested Learning

- Nested Learning vs. Traditional Learning Paradigms

- Making Sense of The Complex

- FAQ Section

Understanding Google’s Nested Learning

Just when you think Google has run out of tricks, it pulls another rabbit out of its technology hat. The big G’s latest marvel is called Nested Learning. If you are an AI enthusiast, a tech founder, or a developer who has dared to delve into machine learning, this one is for you: a new learning paradigm that aims to tame the arch-nemesis of continual learning, ‘catastrophic forgetting’.

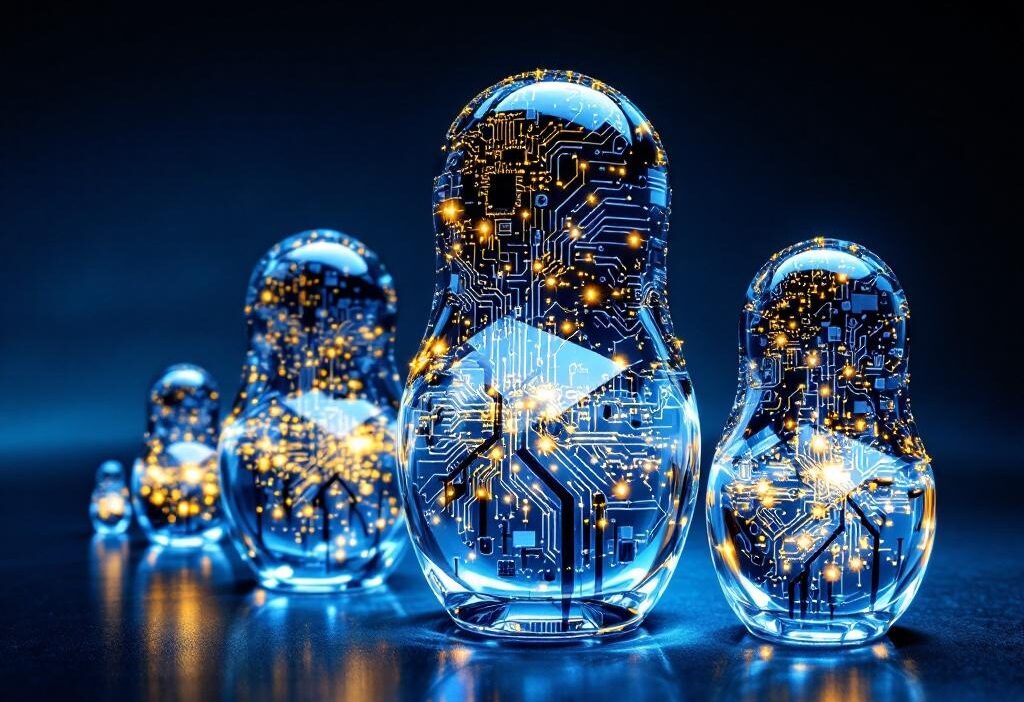

In plain terms, Nested Learning treats a model not as one monolithic training process but as a set of smaller, interconnected optimization problems, each with its own internal workflow. Think of it as a set of Russian dolls, where each doll (an optimization problem) is housed within another, keeping its own identity while still contributing to the overall objective.
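To make the Russian doll picture a little more concrete, here is a toy sketch in Python. It is not Google’s implementation. It only assumes, as one reading of the idea, that each level is a small optimization problem with its own input and its own update frequency. The names `run_levels`, `fast`, `slow`, and `period` are made up for this illustration.

```python
def run_levels(stream, period=5, fast_rate=0.5, slow_rate=0.1):
    """Toy two-level learner (hypothetical, for illustration only)."""
    fast = 0.0  # inner level: follows the incoming data
    slow = 0.0  # outer level: follows the inner level, but less often
    for step, target in enumerate(stream, start=1):
        # Inner level: one gradient step on 0.5 * (fast - target)**2, every step.
        fast -= fast_rate * (fast - target)
        # Outer level: one gradient step on 0.5 * (slow - fast)**2,
        # taken only once every `period` steps.
        if step % period == 0:
            slow -= slow_rate * (slow - fast)
    return fast, slow


# 200 steps of an "old task" (target 1.0), then 10 steps of a "new task" (target -1.0).
stream = [1.0] * 200 + [-1.0] * 10
fast, slow = run_levels(stream)
print(f"fast level: {fast:.3f}, slow level: {slow:.3f}")
```

In this toy, the fast level swings over to the new target almost immediately, while the slow level still sits much closer to the old one. That is the intuition behind nesting levels that update at different speeds: something in the system changes slowly enough to hold on to what came before.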

Nested Learning vs. Traditional Learning Paradigms

To put it in perspective, tools you may already know, like n8n, Pinecone, and LangChain, work at the application layer: n8n automates workflows, Pinecone is a vector database, and LangChain is a framework for building applications on top of language models. None of them changes how a model learns. Nested Learning works one level down, taking a layered approach to how the model itself is structured and optimized, which is what is meant to make it more resilient as training continues. So the tooling around your model stays the same; the hoped-for difference is a model with far less cognitive amnesia.

Making Sense of The Complex

At this point, you may be wondering why it matters. Consider this: as a model learns new tasks, it can lose its grip on the old ones, a phenomenon aptly named “catastrophic forgetting”. It’s like your model turned up at a party (new tasks), had its share of fun, and then totally blanked on how it got there in the first place (previous learning)! Nested Learning aims to keep the model from losing sight of previous learning while it takes on new tasks, akin to having your cake and eating it too.
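The party scenario can be reproduced with a deliberately tiny model. The sketch below is a plain-Python illustration of catastrophic forgetting, not of Nested Learning itself. The one-weight model, the two made-up tasks, and the helper names `mse` and `train` are all assumptions chosen to keep the example small.

```python
def mse(w, data):
    """Mean squared error of the one-weight model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def train(w, data, lr=0.05, epochs=200):
    """Plain full-batch gradient descent on the mean squared error."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


xs = [-1.0, -0.5, 0.5, 1.0]
task_a = [(x, 2.0 * x) for x in xs]   # old task: y = 2x
task_b = [(x, -3.0 * x) for x in xs]  # new task: y = -3x

w = train(0.0, task_a)
loss_a_before = mse(w, task_a)  # error on task A right after learning it

w = train(w, task_b)            # keep training, but only on task B
loss_a_after = mse(w, task_a)   # error on task A after learning task B

print(f"task A loss before: {loss_a_before:.6f}, after: {loss_a_after:.2f}")
```

The error on task A is close to zero after the first round of training and jumps once the model has been trained on task B, because the only weight it has was overwritten. Real networks have far more parameters, but the same overwriting is what the term describes.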

To wrap this techno ride up, Google’s Nested Learning is more than just another machine learning paradigm. It’s a significant stride toward making AI systems more stable and dependable. We have yet to see how it holds up in real-world applications, but the method points to a promising road ahead, one that could eventually make catastrophic forgetting a thing of the past.

Ready to embark on the nested route?

Tweetable Takeaways:

- “Nested Learning: Google’s new stride in machine learning, making catastrophic forgetting passé.”

- “Like Russian Dolls, each with a role – that’s Google’s Nested Learning for your AI goals.”

- “With Nested Learning, your model can party away with new tasks, without blanking on the old ones.”

FAQ Section

What is Nested Learning?

Nested Learning is a new machine learning paradigm proposed by Google that aims to overcome catastrophic forgetting by structuring a model as a hierarchy of nested optimization problems rather than one monolithic training process.

Why is catastrophic forgetting a problem?

Catastrophic forgetting occurs when a model loses its ability to remember previously learned tasks as it learns new information, leading to a degradation in performance on older tasks.

How does Nested Learning differ from traditional models?

Traditional training treats a model as a single optimization process. Nested Learning instead treats it as a layered set of interconnected optimization problems, with the aim of letting the model retain what it learned earlier while adapting to new tasks.

What are some examples of tools related to Nested Learning?

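Tools such as n8n (workflow automation), Pinecone (a vector database), and LangChain (a framework for building applications on language models) often sit around a model in an AI stack. They operate at the application layer and are not part of Nested Learning, which concerns how the model itself is trained.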
