## Key Insights

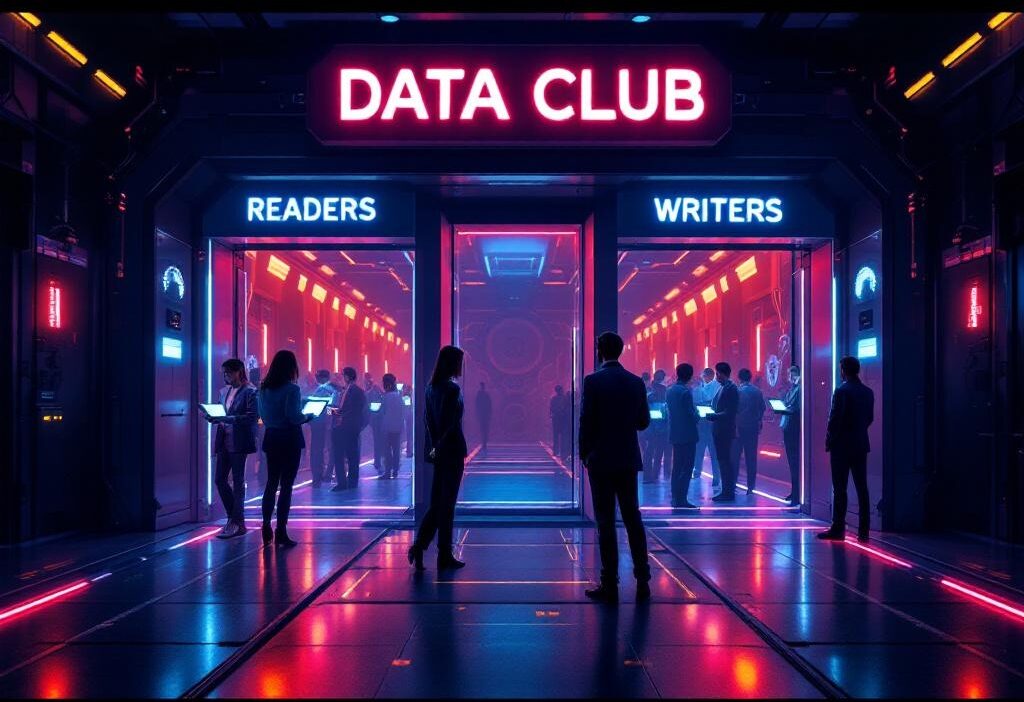

# Concurrency with Constraints

When multiple peepers and scribes (readers and writers) are vying for your precious data, you need a guard at the gate. Readers can mingle in parallel, since reading alone harms no state, while each writer demands an all-clear zone: no readers and no other writers.

# Smart Locking vs. Mutex

Mutexes are strict bouncers: if one thread is inside, everyone else waits outside. Readers-writers locks split the queue: multiple readers enter via the express lane, but a writer gets the VIP room only once the place is empty.

# Preference Matters

Reader-preference yields high throughput for read-heavy workloads such as analytics dashboards, but it can leave writers perpetually knocking. Writer-preference flips the switch: writes land promptly and reads stay fresh, but impatient readers might glare. Fair locking hands out tickets, ensuring nobody camps out at the door.

## Common Misconceptions

# Myth: One Lock Rules Them All

A plain mutex is simple, sure, but it’s a blunt instrument. If your workload is read-heavy, it serializes reads that could safely run in parallel, so you’re throwing away CPU cycles and concurrency.

# Myth: Readers-Writers Is One Flavor

Reader-preference, writer-preference, fair variants: each has trade-offs. Pick wrong, and you starve someone.

# Myth: No Starvation by Default

Assuming your lock magically handles starvation? Think again. Under a reader-preference lock, readers streaming in one after another can block a writer forever.

## Modern Trends

# Fairness at Scale

Fair, queue-based locks rotate access like a queueing DJ, serving threads roughly in arrival order and guaranteeing every thread hits the dance floor eventually.

# Hardware-Level Optimizations

On multi-core and NUMA setups, lock implementations are tuned to avoid cache thrashing and false sharing. Your CPUs (and your sanity) thank you.

# Library Support

Java’s ReentrantReadWriteLock, C++’s std::shared_mutex, Rust’s std::sync::RwLock and the parking_lot crate: they’ve all got readers-writers built in.

## Examples

# Databases

PostgreSQL and MySQL implement table- and row-level locks with shared and exclusive modes: countless shared-lock holders can coexist, while a statement that needs an exclusive lock waits until they clear. Plain SELECTs in both systems usually read from MVCC snapshots, so they can keep streaming through even while an UPDATE or INSERT is in flight.
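To make the shared-versus-exclusive idea concrete, here is a minimal Python sketch of a reader-preference readers-writers lock. Python’s standard library does not ship one, so the class `ReadPreferringRWLock`, its `read_locked` and `write_locked` methods, and the `run_demo` helper are all hypothetical names invented for this illustration, not any library’s API. Treat it as a teaching sketch rather than a production lock: because readers never wait for queued writers, a steady stream of readers can starve a writer.

```python
import threading
from contextlib import contextmanager


class ReadPreferringRWLock:
    """Hypothetical minimal readers-writers lock with reader preference."""

    def __init__(self):
        self._cond = threading.Condition()
        self._active_readers = 0
        self._writer_active = False

    @contextmanager
    def read_locked(self):
        with self._cond:
            # Readers wait only for an active writer, never for a queued one.
            while self._writer_active:
                self._cond.wait()
            self._active_readers += 1
        try:
            yield
        finally:
            with self._cond:
                self._active_readers -= 1
                if self._active_readers == 0:
                    # Last reader out wakes any waiting writer.
                    self._cond.notify_all()

    @contextmanager
    def write_locked(self):
        with self._cond:
            # A writer needs the place empty: no readers, no other writer.
            while self._writer_active or self._active_readers > 0:
                self._cond.wait()
            self._writer_active = True
        try:
            yield
        finally:
            with self._cond:
                self._writer_active = False
                self._cond.notify_all()


def run_demo(num_readers=3, num_writers=4, increments=1000):
    """Return (readers_overlapped, final_counter) for a small workload."""
    lock = ReadPreferringRWLock()
    state = {"value": 0}
    barrier = threading.Barrier(num_readers)
    overlapped = []

    def reader():
        with lock.read_locked():
            # The barrier only releases if every reader is inside the
            # lock at the same time, which a plain mutex would forbid.
            try:
                barrier.wait(timeout=5)
                overlapped.append(True)
            except threading.BrokenBarrierError:
                overlapped.append(False)

    def writer():
        for _ in range(increments):
            with lock.write_locked():
                state["value"] += 1  # exclusive, so no lost updates

    threads = [threading.Thread(target=reader) for _ in range(num_readers)]
    threads += [threading.Thread(target=writer) for _ in range(num_writers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return all(overlapped), state["value"]


if __name__ == "__main__":
    print(run_demo())
```

With the default arguments, `run_demo()` should report that all three readers held the lock simultaneously and that the counter reached 4000, one increment per exclusive write. Swapping the `while self._writer_active` check in `read_locked` for one that also counts waiting writers would be one way to move toward writer preference.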
# File Systems

Multiple processes can tail log files without a hiccup, but when one process writes, the kernel, often with help from shared and exclusive file locks, holds the line to prevent byte-level carnage.

# AI & Automation Pipelines

n8n, Pinecone, LangChain: tools like these rely on concurrency primitives under the hood so your workflows don’t grind to a halt under heavy reads.

Got a concurrency horror story or a killer optimization tip? What’s your take?